Improving Result Experiences Through UX Research

Using UX research to clarify test results, reduce confusion and support queries, boost engagement, and help users better understand their health status.

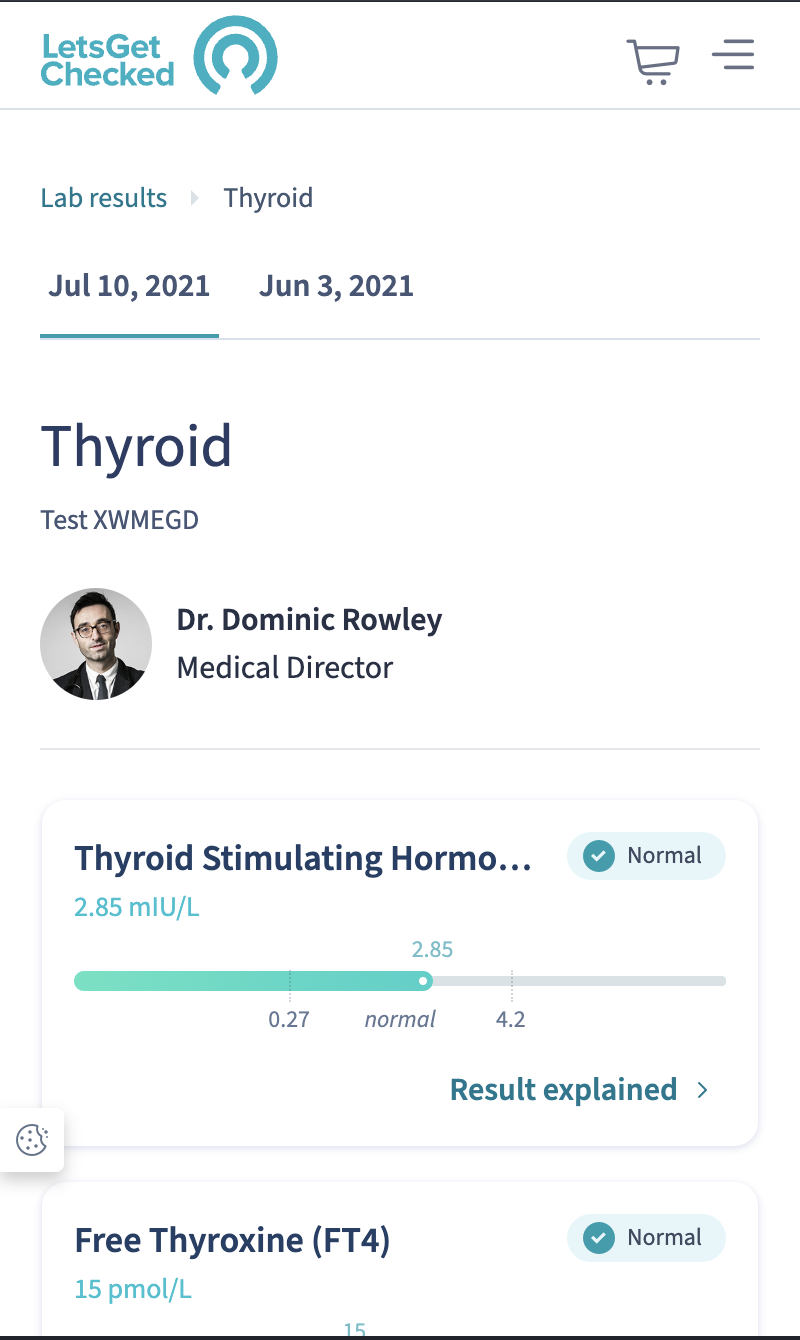

LetsGetChecked provides at-home health testing kits, enabling individuals to monitor their health privately and conveniently. Results are accessible via a secure online account, and the company's clinical team offers support, including consultations and prescriptions.

Unclear result narratives were causing confusion, missed follow-up actions, and a high volume of customer support interactions. I spearheaded a research initiative at LetsGetChecked to address customer dissatisfaction with result explanations.

Project Overview

I used a mixed-method approach combining surveys, customer reviews, in-depth customer interviews and nurse interviews.

Through cross-team collaboration, we addressed key customer pain points.

User interactions with result explanations increased by 63% in the first month after launch.

The improved result narratives reduced inbound calls, freeing up clinical resources.

Research Approach

To build a comprehensive understanding of the issue, I designed a mixed-method study combining quantitative and qualitative research:

Quantitative Insights:

Surveyed 2,000 customers to capture broad sentiment and expectations.

Analysed 12 months of 1–3 star Trustpilot reviews and inbound complaints related to result explanations.

Qualitative Depth:

Conducted 15 in-depth customer interviews to explore frustrations and unmet needs.

Interviewed 8 nurses to understand clinical perspectives on the most commonly used tests.

This multi-angle approach ensured we captured both user needs and operational context, allowing us to make data-driven recommendations.

Key Findings

Our research revealed three core problem areas:

1. Result Explanations

Customers struggled to understand biomarker roles, what affects their levels, and how “normal” their results were.

The lack of detailed explanations left users feeling uncertain and anxious.

2. Treatment Options & Next Steps

Users wanted guidance on how to improve their biomarker levels naturally.

Some biomarker results raised concerns, but users were unsure what was treatable and what required action.

Many users missed follow-up calls from the clinical team due to:

Calls from unrecognised numbers being flagged as spam.

No clear way to reconnect with the clinical team or access call summaries.

3. Usability Issues

Many users were unaware they could tap biomarker names for more information.

Lab reports were available for download, but poor discoverability led to frequent confusion.

The process for ordering a replacement kit (when eligible) was unclear.

These insights highlighted a disconnect between customer expectations and the current experience, undermining trust and engagement.

Collaborative Solution Design

To address these issues, I led a design sprint workshop with designers, content strategists, nurses, and product managers. Together, we:

Refined biomarker explanations to provide clearer, more actionable insights.

Clarified next steps and treatment options with easy-to-follow guidance.

Improved app usability by making key features (biomarker details, downloadable reports, replacement kits) more discoverable.

The output was a set of updated designs and content, ready for usability testing.

Usability Testing

Objectives

To assess whether the updated design:

Increased user interaction with result explanations.

Improved understanding of biomarkers, their significance, and next steps.

Ensured users were aware of clinical support options if they had further questions.

Methodology

Participants: 30 users (15 per test group)

Prototypes Tested:

Version A: Existing results interface.

Version B: Updated results interface with improved explanations and usability enhancements.

Testing Approach:

Participants were randomly assigned to one version.

Task success scores (0–2 scale, lower is better):

0: Success

1: Partial failure/difficulty

2: User error

User ratings (1–5 scale, from Strongly Disagree to Strongly Agree) for:

Trust in the results provided.

Clarity on next steps after receiving results.

Overall understanding of test results.
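To make the scoring scheme concrete, here is a minimal Python sketch of how results for each version could be aggregated. The `Participant` record, the `summarise` helper and the rating keys are hypothetical, not the tooling used in this study. Note that the two scales point in opposite directions: a lower mean task score is better, while a higher mean rating is better.

```python
from dataclasses import dataclass
from statistics import mean


# Hypothetical record for one participant (not the study's actual data model).
# Task scores use the 0-2 scale: 0 = success, 1 = partial failure/difficulty,
# 2 = user error, so lower is better. Ratings use the 1-5 agreement scale.
@dataclass
class Participant:
    version: str               # "A" (existing) or "B" (updated)
    task_scores: list[int]     # one 0-2 score per task
    ratings: dict[str, int]    # statement key -> 1-5 rating


def summarise(participants: list[Participant], version: str) -> dict[str, float]:
    """Aggregate task scores and ratings for one test group."""
    group = [p for p in participants if p.version == version]
    if not group:
        raise ValueError(f"no participants for version {version!r}")

    # Pool every task score in the group.
    all_scores = [s for p in group for s in p.task_scores]
    summary = {
        "mean_task_score": mean(all_scores),                   # lower is better
        "success_rate": all_scores.count(0) / len(all_scores),  # share of tasks scored 0
    }

    # Average each rating statement across the group (higher is better).
    for statement in group[0].ratings:
        summary[f"mean_{statement}"] = mean(p.ratings[statement] for p in group)
    return summary
```

Calling `summarise(participants, "A")` and `summarise(participants, "B")` on the same list gives side-by-side figures for the two groups. With only 15 participants per group, such summaries are best read as directional rather than as precise estimates.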

Results

Version B markedly reduced usability issues: users found information faster and reported fewer unanswered questions.

On comprehension and next-step clarity, Version B outperformed Version A.

Trust scores were comparable across both versions, suggesting users already trusted the data but needed the insights delivered more clearly.

Additional user feedback informed further refinements to design and content.

Impact & Live Performance Tracking

Following the launch of the updated experience:

User interactions with result descriptions increased by 63% in the first month.

Inbound calls about “normal” results decreased, indicating greater user confidence and self-sufficiency.

The clinical team reported fewer repetitive enquiries about biomarker explanations, improving operational efficiency.

These metrics pointed to a tangible improvement in the user experience, aligning with LetsGetChecked’s mission to empower users with clear, actionable health insights.

By taking a research-driven, user-centred approach, we improved result clarity, reduced dependence on customer support, and strengthened user confidence in LetsGetChecked’s at-home health testing services.